Let me start this article by describing the problem I had finding a proper title for it. As some of you may already know, I write a series called “Data Science for Losers”, which comprises several articles that describe different tools, methods and libraries one can use to explore the vast fields of data science. And just a few days ago, while finishing my Data Science and ML Essentials course, I discovered that Azure ML has built-in support for Jupyter and Python which, of course, made it very interesting to me because it makes Azure ML an ideal ground for experimentation. […]

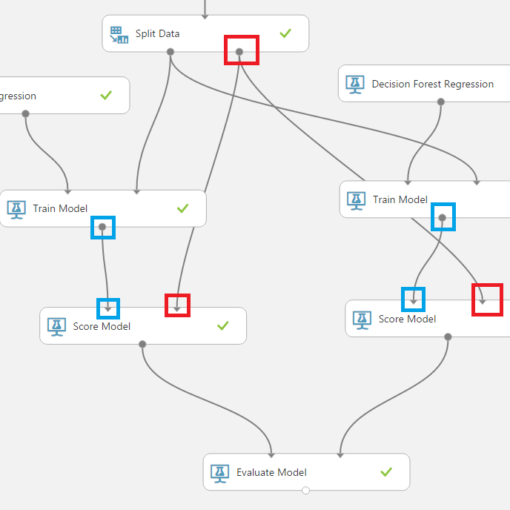

In this article we’ll explore Microsoft’s Azure Machine Learning environment and how to combine Cloud technologies with Python and Jupyter. As you may know, I’ve been using them extensively throughout this article series, so I have a strong opinion on what a Data Science-friendly environment should look like. Of course, there’s nothing wrong with other coding environments or languages, for example R, so your opinion may differ greatly from mine, and this is fine. Azure ML also offers very good R support! So, feel free to adapt everything from this article to your needs. And before we begin, a few words about how I came […]

Julia is what I’d like Python to be: dynamic but fast like C, supporting strong typing without being dogmatic (in both directions: static vs. dynamic), with a powerful REPL and many modules written in the same language (so I don’t have to switch to C). Julia is still a new language and I suppose not many of us currently use it in production. And yes, Julia is an ‘academic’ language, with a strong emphasis on technical/scientific computing, but honestly, would you rather run your business on an ‘anti-scientific’ / ‘anti-technical’ language? Yes, I know, it sounds very polemical because there […]

Sometimes, the hardest part of writing is completing the very first sentence. I began to write the “Loser’s articles” because I wanted to learn a few bits about Data Science, Machine Learning, Spark, Flink etc., but as time passed the whole thing degenerated into a really chaotic mess. This may be a “creative” chaos but it’s still way too messy to make any sense to me. I’ve got a few positive comments and also a lot of nice tweets, but quality is not a question of comments or individual Twitter frequency. Do these texts properly describe “Data Science”, or at […]

It’s been a while since I wrote my last article. A Big-Data Sorry to my “massive” audience. Actually, I was planning to write a follow-up to the last article on Machine Learning but could not find enough time to complete it. Also, I’ll soon give a presentation at a Meetup (in Germany). A classic example of what happens when you have to complete several tasks at the same time. In the end all of them will fail. But I’ll try to compensate for it with yet another task: by writing an article about the brand-new Apache Flink v0.10.0 and its DataStream API. 😀 As always, […]

Douglas Crockford once said that people who finally understand Monads immediately lose the capability to explain them to others. Well, the few readers of this chaotic blog are lucky: I neither understand them nor am able to explain them anyway. However, I can say in advance that a Monad in Scala is something that implements two methods: map and flatMap. Haskell coders (luckily, they’re certainly not reading this blog) would now say: No, there’s no flatMap but only bind, written as >>=. Yes, I know, but anyway, we’ll stick with flatMap. And to make this article somewhat cooler we’ll use a […]

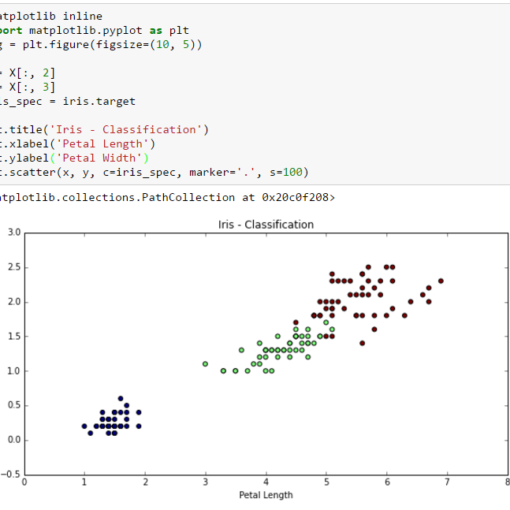

It’s been a while since I’ve written an article on Data Science for Losers. A big Sorry to my readers. But I don’t think that many people are reading this blog. Now let’s continue our journey with the next step: Machine Learning. As always, the examples will be written in Python and the Jupyter Notebook can be found here. The ML library I’m using is the well-known scikit-learn. What’s Machine Learning? From my non-scientist perspective I’d define ML as a subset of Artificial Intelligence research which develops self-learning (or self-improving?) algorithms that try to gain knowledge from data and make predictions […]

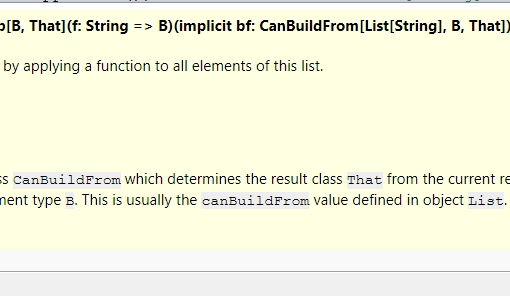

In the last article from the Data Science for Losers series I used a few mini examples in Scala to show how Apache Spark works. Granted, I’m still not sure if this was a “good” idea at all, but given that the whole series has degenerated into something really chaotic, a few harmless lines in Scala wouldn’t make it worse anyway. However, the much lower retweet rate of the last article made it clear that the jump from my bad Python code to my even worse Scala code wasn’t very well received. Well, I think it’s time for a crash course in Scala […]

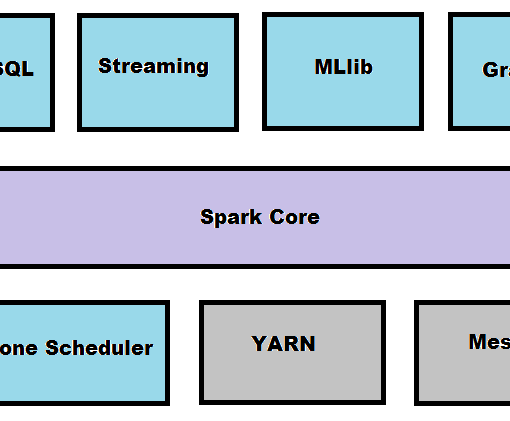

I’ve already mentioned Apache Spark and my irrational plan to integrate it somehow with this series, but unfortunately the previous articles were a complete mess, so it had to be postponed. And now, finally, this blog entry is completely dedicated to Apache Spark with examples in Scala and Python. The notebook for this article can be found here. Apache Spark Definition: By its own definition, Spark is a fast, general engine for large-scale data processing. Well, someone might say: but we already have Hadoop, so why should we use Spark? I’d answer such a question with the remark that Hadoop is EJB reinvented and […]

This should have been the third part of the Loser’s article series but, as you may know, I’m trying very hard to keep the overall quality as low as possible. This, of course, implies missing parts, misleading explanations, irrational examples and awkward English syntax (it’s actually German syntax covered by English-like semantics 😳 ). And that’s why we now have to go through this addendum and not the real Part Three about using Apache Spark with IPython. The notebook can be found here. So, let’s talk about a few features of Pandas I forgot to mention in the last two articles. Playing SQL with DataFrames: Pandas is wonderful because of […]