Douglas Crockford once said that people who finally understand Monads immediately lose the ability to explain them to others. Well, the few readers of this chaotic blog are lucky: I neither understand them nor am able to explain them anyway. However, I can say in advance that a Monad in Scala is something that implements two methods: map and flatMap. Haskell coders (luckily, they’re certainly not reading this blog) would now say: No, there’s no flatMap, only bind, written as >>=. Yes, I know, but we’ll stick with flatMap anyway. And to make this article somewhat cooler we’ll use a […]
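To make the map/flatMap idea a bit more tangible, here is a minimal sketch in Python (the language most of this blog’s examples use), not in Scala. The `Maybe` class, its `flat_map` method and the `safe_reciprocal` helper are made-up names for illustration only; they merely imitate what Scala’s `Option` does with `map` and `flatMap`.

```python
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

A = TypeVar("A")
B = TypeVar("B")


@dataclass(frozen=True)
class Maybe(Generic[A]):
    """Hypothetical container: holds a value, or None for 'nothing'."""
    value: Optional[A] = None

    def map(self, f: Callable[[A], B]) -> "Maybe[B]":
        # f returns a plain value, so the result gets wrapped again.
        return Maybe(None) if self.value is None else Maybe(f(self.value))

    def flat_map(self, f: Callable[[A], "Maybe[B]"]) -> "Maybe[B]":
        # f already returns a Maybe, so it is NOT wrapped a second time.
        return Maybe(None) if self.value is None else f(self.value)


def safe_reciprocal(x: float) -> Maybe[float]:
    """Hypothetical helper: 1/x, or 'nothing' when x is zero."""
    return Maybe(None) if x == 0 else Maybe(1 / x)


# Chaining: each step only runs if the previous one produced a value.
result = Maybe(4.0).flat_map(safe_reciprocal).map(lambda y: y * 2)
```

The only difference between the two methods is who does the wrapping: `map` wraps the function’s result itself, while `flat_map` trusts the function to return an already wrapped value.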

Monthly Archives: October 2015

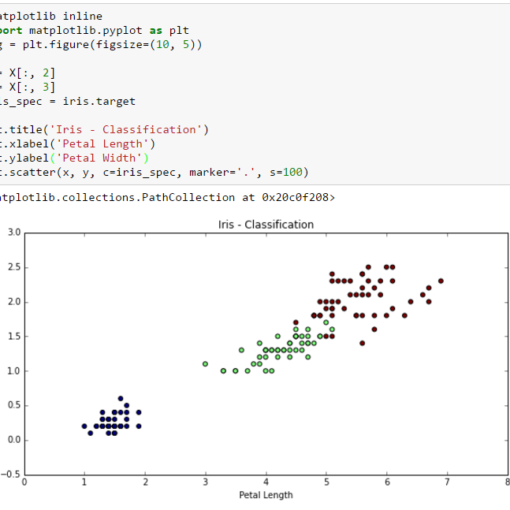

It’s been a while since I wrote an article on Data Science for Losers. A big sorry to my readers, although I don’t think that many people are reading this blog. Now let’s continue our journey with the next step: Machine Learning. As always, the examples will be written in Python, and the Jupyter Notebook can be found here. The ML library I’m using is the well-known scikit-learn. What’s Machine Learning? From my non-scientist perspective I’d define ML as a subset of Artificial Intelligence research that develops self-learning (or self-improving?) algorithms that try to gain knowledge from data and make predictions […]

In the last article from the Data Science for Losers series I used a few mini examples in Scala to show how Apache Spark works. Granted, I’m still not sure if this was a “good” idea at all, but given that the whole series has degenerated into something really chaotic, a few harmless lines in Scala wouldn’t make it any worse. However, the much lower retweet rate of the last article made it clear that the jump from my bad Python code to my even worse Scala code wasn’t very well received. Well, I think it’s time for a crash course in Scala […]

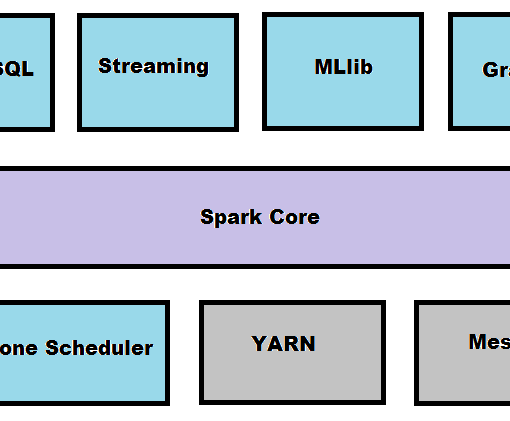

I’ve already mentioned Apache Spark and my irrational plan to integrate it somehow with this series, but unfortunately the previous articles were a complete mess, so it had to be postponed. And now, finally, this blog entry is completely dedicated to Apache Spark with examples in Scala and Python. The notebook for this article can be found here. Apache Spark Definition: By its own definition Spark is a fast, general engine for large-scale data processing. Well, someone might say: but we already have Hadoop, so why should we use Spark? I’d answer such a question with the remark that Hadoop is EJB reinvented and […]

This should have been the third part of the Loser’s article series but, as you may know, I’m trying very hard to keep the overall quality as low as possible. This, of course, implies missing parts, misleading explanations, irrational examples and an awkward English syntax (it’s actually German syntax covered by English-like semantics 😳 ). And that’s why we now have to go through this addendum and not the real Part Three about using Apache Spark with IPython. The notebook can be found here. So, let’s talk about a few Pandas features I forgot to mention in the last two articles. Playing SQL with DataFrames: Pandas is wonderful because of […]

In the first article we learned a bit about Data Science for Losers. And the most important message, in my opinion, is that patterns are everywhere but many of them can’t be recognized immediately. This is one of the reasons why we’re digging deep holes in our databases, data warehouses, and other silos. In this article we’ll use a few more methods from Pandas’ DataFrames and generate plots. We’ll also create pivot tables and query an MS SQL database via ODBC. SQLAlchemy will be our helper in this case and we’ll see that even Losers like us can easily merge and filter SQL tables without touching the […]
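As a rough idea of what merging, filtering and pivoting look like in Pandas, here is a small sketch. It deliberately does not connect to MS SQL via ODBC or SQLAlchemy; instead, two hand-made DataFrames stand in for SQL tables. All table names, column names and numbers are invented for illustration.

```python
import pandas as pd

# Hypothetical data standing in for two SQL tables.
orders = pd.DataFrame({
    "customer_id": [1, 1, 2, 3],
    "year": [2014, 2015, 2015, 2015],
    "amount": [100.0, 150.0, 80.0, 40.0],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "country": ["DE", "DE", "AT"],
})

# Roughly an SQL INNER JOIN on customer_id.
joined = orders.merge(customers, on="customer_id", how="inner")

# Roughly an SQL WHERE clause: keep only the rows from 2015.
recent = joined[joined["year"] == 2015]

# Pivot table: total amount per country (rows) and year (columns).
pivot = joined.pivot_table(
    index="country",
    columns="year",
    values="amount",
    aggfunc="sum",
    fill_value=0,  # combinations without any orders become 0
)
print(pivot)
```

With real database tables, the two DataFrames would typically come from a query (for example via `pd.read_sql`) rather than being typed in by hand; the merge, filter and pivot steps stay the same.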

Anaconda Installation: To do some serious statistics with Python one should use a proper distribution like the one provided by Continuum Analytics. Of course, a manual installation of all the needed packages (Pandas, NumPy, Matplotlib etc.) is possible, but beware of the complexities and convoluted package dependencies. In this article we’ll use the Anaconda Distribution. The installation under Windows is straightforward, but avoid using multiple Python installations (for example, Python3 and Python2 in parallel). It’s best to let Anaconda’s Python binary be your standard Python interpreter. Also, after the installation you should run these commands: conda update conda conda update “conda” […]

The incredibly fast Parallel DOM is surely the most famous of all the features Ractive.JS provides, but there’s more. With Adaptors you can extend Ractive.JS so it can communicate with other libraries, modules or components. In this article we’ll create an adaptor for a query-service that delivers JSON data. The demo app is located here. The sources are here. Project Structure: The project contains four JavaScript files and a simple template: main.html; query-service.js, for querying JSON data by using the new fetch API; query-helper.js, which acts as a module that’ll be wrapped by the adaptor; query-adapter.js, which Ractive.JS uses to access the query-helper; main.js, […]

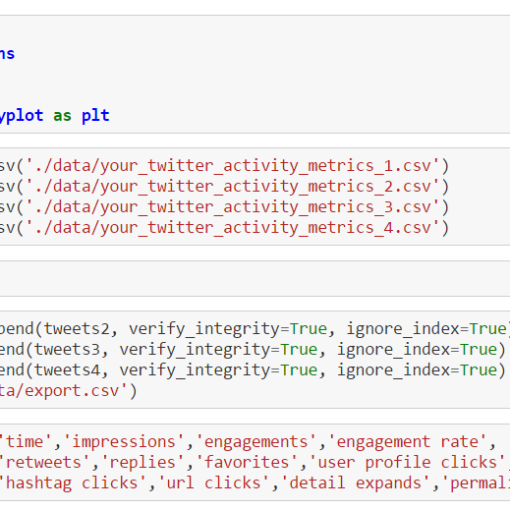

This article covers an older version of the source code linked below. A few additional options, like filtering spam tweets, have been added, but the base functionality has remained the same. If you experience any problems using the newer versions, please leave a comment. I am a Twitter addict. Most of my coding-related stuff comes from links and suggestions via Twitter. And of course I’m using it everywhere, from desktop to smartphone, while travelling, or at work. My carefully defined feeds are always fresh & flowing. However, sometimes I just want to have all the interesting tweets collected in one place without any external […]